
Josh, I’ve been hearing a lot about ‘AI-generated art’ and seeing a whole lot of truly crazy-looking memes. What’s going on, are the machines picking up paintbrushes now?

Not paintbrushes, no. What you’re seeing are neural networks (algorithms that supposedly mimic how our neurons signal each other) trained to generate images from text. It is basically a lot of maths.

Neural networks? Generating images from text? So, like, you plug ‘Kermit the Frog in Blade Runner’ into a computer and it spits out pictures of … that?

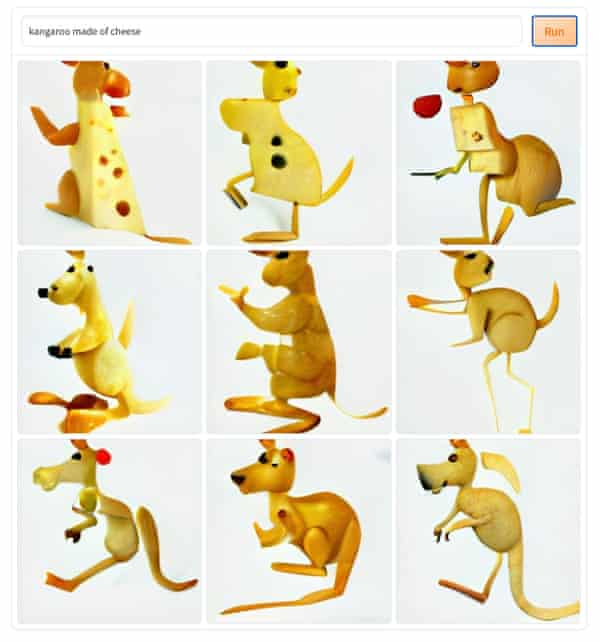

You aren’t thinking outside the box enough! Sure, you can create all the Kermit images you want. But the reason you’re hearing about AI art is because of the ability to create images from ideas no one has ever expressed before. If you do a Google search for “a kangaroo made of cheese” you won’t really find anything. But here are nine of them generated by a model.

You mentioned before that it’s all a load of maths, but – putting it as simply as you can – how does it actually work?

I’m no expert, but essentially what they’ve done is get a computer to “look” at millions or billions of pictures of cats and bridges and so on. These are usually scraped from the internet, along with the captions associated with them.

The algorithms identify patterns in the images and captions and eventually can start predicting which captions and images go together. Once a model can predict what an image “should” look like based on a caption, the next step is reversing it – generating entirely novel images from new “captions”.
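To make the “which captions and images go together” idea a little more concrete, here is a toy sketch in Python. It assumes, as a simplification, that a trained model has already turned each picture and each caption into a short list of numbers (an “embedding”), and that matching pairs end up pointing in roughly the same direction. The three-number vectors and the names below are invented purely for illustration, and the sketch only covers the matching half, not the generating half.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-number "embeddings". A real model learns vectors with
# hundreds of dimensions from millions of captioned pictures.
image_embeddings = {
    "photo_of_cat": [0.9, 0.1, 0.0],
    "photo_of_bridge": [0.0, 0.2, 0.95],
}
caption_embeddings = {
    "a cat asleep on a sofa": [0.85, 0.2, 0.05],
    "the Golden Gate at dusk": [0.05, 0.1, 0.9],
}

def best_image_for(caption):
    """Return the image whose embedding points most nearly the same way as the caption's."""
    vec = caption_embeddings[caption]
    return max(image_embeddings, key=lambda name: cosine_similarity(vec, image_embeddings[name]))

for caption in caption_embeddings:
    print(caption, "->", best_image_for(caption))
# prints:
# a cat asleep on a sofa -> photo_of_cat
# the Golden Gate at dusk -> photo_of_bridge
```

Cosine similarity is used here only because it is a simple, common way to compare such vectors; it is not a claim about exactly how any particular product scores its pairs.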

When these programs are making new images, is it finding commonalities – like, all my images tagged ‘kangaroos’ are usually big blocks of shapes like this, and ‘cheese’ is usually a bunch of pixels that look like this – and just spinning up variations on that?

It’s a bit more than that. If you look at this blog post from 2018 you can see how much trouble older models had. When given the caption “a herd of giraffes on a ship”, it created a bunch of giraffe-coloured blobs standing in water. So the fact we are getting recognisable kangaroos and several kinds of cheese shows how big a leap there has been in the algorithms’ “understanding”.

Dang. So what’s changed so that the stuff it makes doesn’t look like utterly horrible nightmares any more?

There have been a number of developments in techniques, as well as in the datasets they train on. In 2020 a company named OpenAI released GPT-3 – an algorithm that can generate text eerily close to what a human might write. One of the most hyped text-to-image generation algorithms, DALL-E, is based on GPT-3. More recently, Google released Imagen, using its own text models.

These algorithms are fed huge amounts of data and made to do thousands of “exercises” to get better at prediction.

‘Exercises’? Are there still actual people involved, like telling the algorithms whether what they’re making is right or wrong?

Actually, this is another big development. When you use one of these models you’re probably only seeing a handful of the images that were actually generated. Similar to how these models were initially trained to predict the best captions for images, they only show you the images that best fit the text you gave them. They are marking themselves.
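Here is a rough sketch of that “marking themselves” step in Python, under the same toy assumption that the text prompt and each generated draft can be turned into vectors of numbers. The prompt vector, draft names and values are all hypothetical; a real system would use a trained scoring model and far bigger vectors. The sketch only shows the generate-many, score, keep-the-best logic.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means a good match."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rerank(prompt_vec, candidates, keep):
    """Sort generated drafts by similarity to the prompt and return the names of the best few."""
    scored = sorted(
        candidates.items(),
        key=lambda item: cosine_similarity(prompt_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in scored[:keep]]

# Hypothetical embedding of "a kangaroo made of cheese":
# first number ~ "kangaroo-ness", second ~ "cheese-ness", third ~ everything else.
prompt = [0.7, 0.7, 0.0]

# Hypothetical drafts the model produced before showing anything to the user.
candidates = {
    "draft_1": [0.9, 0.1, 0.2],  # mostly kangaroo, little cheese
    "draft_2": [0.6, 0.8, 0.0],  # kangaroo and cheese
    "draft_3": [0.0, 0.1, 1.0],  # neither
    "draft_4": [0.5, 0.5, 0.3],  # both, plus some noise
}

print(rerank(prompt, candidates, keep=2))  # prints ['draft_2', 'draft_4']
```

Nobody has to label the drafts as right or wrong here: the same kind of text-to-image matching learned during training does the judging, which is why it works as “marking themselves”.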

But there are still weaknesses in this generation process, right?

I can’t stress enough that this isn’t intelligence. The algorithms don’t “understand” what the words or the pictures mean in the same way you or I do. It’s sort of like a best guess based on what it has “seen” before. So there are quite a few limitations, both in what it can do and in what it does that it probably shouldn’t (such as potentially graphic imagery).

Okay, so if the machines are making pictures on request now, how many artists will this put out of work?

For now, these algorithms are mostly restricted or expensive to use. I’m still on the waiting list to try out DALL-E. But computing power is also getting cheaper, there are lots of huge image datasets, and even regular people are building their own models, like the one we used to create the kangaroo images. There is also a version online called Dall-E 2 mini, which is the one people are using, exploring and sharing online to create everything from Boris Johnson eating a fish to kangaroos made of cheese.

I doubt anyone knows what will happen to artists. But there are still so many edge cases where these models break down that I wouldn’t be relying on them solely.

I have a looming feeling AI generated art will devour the economic sustainability of being an illustrator

not because art will be replaced by AI as a whole – but because it’ll be so much cheaper and good enough for most people and companies

— Freya Holmér (@FreyaHolmer) June 2, 2022

Are there other problems with creating images based purely on pattern-matching and then marking themselves on their answers? Any questions of bias, say, or unfortunate associations?

Something you’ll notice in the corporate announcements of these models is that they tend to use innocuous examples. Lots of generated images of animals. This speaks to one of the big issues with using the internet to train a pattern-matching algorithm – so much of it is absolutely terrible.

A couple of years ago a dataset of 80m images used to train algorithms was taken down by MIT researchers because of “derogatory terms as categories and offensive images”. Something we have noticed in our experiments is that “businessy” words seem to be associated with generated images of men.

So right now it’s just about good enough for memes, and it still makes weird nightmare images (especially of faces), but not as much as it used to. But who knows about the future. Thanks Josh.
